WHAT IT TELLS US

Sensitivity is a measure of the magnitude of input signal needed for the amplifier to produce full output, at maximum volume. This tells us what signal sources the amplifier can handle and still produce full output if required. But it also tells us where the volume control is likely to be set in use and how quickly volume rises when it is turned up. This affects a user’s perception of power.

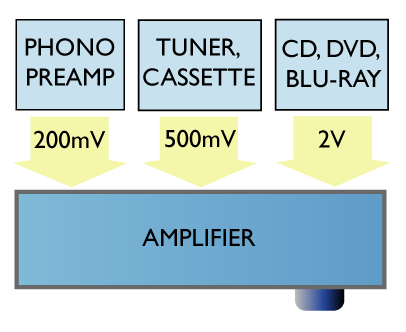

All Compact Disc players produce 2V output maximum, because that’s the standard set for the format. Successors such as DVD and Blu-ray also produce 2V. Amplifiers designed for these sources typically have an input sensitivity of 400mV on all line inputs, such as Tuner, CD, Aux and Tape (this does not include a Phono input, if fitted). It’s just enough to cope with tuners and suchlike having 500mV output, but not so high as to limit the useful travel, and therefore resolution, of the volume control. However, sensitivity is rising to cope with legacy sources such as old tuners and cassette decks that deliver 100mV-300mV, and especially external phono stages that may barely produce 100mV out. Naim amplifiers use a buffer input stage before the volume control and have an input sensitivity as high as 90mV.

With very high input sensitivity an amplifier will jump up to full volume very quickly as volume is turned up, giving a perception of being powerful, irrespective of true power output. For this reason, as well as to broaden compatibility with sources, sensitivity is increasing in modern amplifiers. Having surplus gain in an amplifier increases complexity though, as well as limiting volume control resolution, and in theory at least it isn’t a good idea if only silver disc players are used. Adjustable input sensitivity (gain trim) is provided on some amplifiers (e.g. Arcam) so volume doesn’t have to be readjusted when switching from high output to low output sources.

An amplifier with high input sensitivity will also deliver a worse noise figure under measurement, because output noise is in most amplifiers determined by noise from the first stage, multiplied up by subsequent gain. When gain is turned down, however, this noise falls accordingly and it isn’t in practice audible.
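To put rough numbers on this, the sketch below treats the amplifier as a single noise source at its input followed by fixed gain, which is a simplification of any real circuit. The 100W into 8 Ohm rating and the 2µV input noise are illustrative assumptions, not measurements of any product, and the function names are our own.

```python
import math

def full_output_voltage(power_w, load_ohms):
    """RMS voltage across the load at rated power: V = sqrt(P * R)."""
    return math.sqrt(power_w * load_ohms)

def voltage_gain(full_output_v, sensitivity_v):
    """Gain needed for an input at the sensitivity figure to give full output."""
    return full_output_v / sensitivity_v

def to_db(ratio):
    """Convert a voltage ratio to decibels."""
    return 20 * math.log10(ratio)

# Assumed values, for illustration only
POWER_W = 100.0        # rated power
LOAD_OHMS = 8.0        # loudspeaker load
INPUT_NOISE_V = 2e-6   # noise referred to the first stage input

v_out = full_output_voltage(POWER_W, LOAD_OHMS)

results = {}
for sensitivity_v in (0.4, 0.09):
    gain = voltage_gain(v_out, sensitivity_v)
    noise_out = INPUT_NOISE_V * gain  # first-stage noise multiplied up by gain
    results[sensitivity_v] = (gain, noise_out)
    print(f"{sensitivity_v * 1000:.0f}mV: gain x{gain:.1f} "
          f"({to_db(gain):.1f}dB), output noise {noise_out * 1e6:.0f}uV")

# How much noisier the 90mV input measures than the 400mV input
noise_penalty_db = to_db(results[0.09][1] / results[0.4][1])
print(f"Noise penalty: {noise_penalty_db:.1f}dB")
```

Under these assumptions the 90mV input measures about 13dB noisier at full volume than the 400mV input, purely because of the extra gain.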

A broadly useful input sensitivity is 200mV. It will cope with most sources. A lower sensitivity of 400mV suits silver disc players and modern tuners that typically give 1V output. It is too low for many external phono stages, however. An input sensitivity of 90mV, such as that of Naim amplifiers, is very high, meaning volume will have to be kept low from CD.
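The figures above can be compared by working out how far a source’s output sits above (or below) the input sensitivity, in decibels. The following is a minimal sketch using the voltages quoted in the text; the function name is our own.

```python
import math

def excess_level_db(source_v, sensitivity_v):
    """Level of the source relative to input sensitivity, in dB.

    Positive: the volume control must attenuate by this much at full output.
    Negative: the source cannot drive the amplifier to full output.
    """
    return 20 * math.log10(source_v / sensitivity_v)

CD_OUTPUT_V = 2.0      # CD player maximum output
PHONO_STAGE_V = 0.1    # an external phono stage giving 100mV

for sensitivity_v in (0.4, 0.2, 0.09):
    cd_db = excess_level_db(CD_OUTPUT_V, sensitivity_v)
    phono_db = excess_level_db(PHONO_STAGE_V, sensitivity_v)
    print(f"{sensitivity_v * 1000:.0f}mV input: "
          f"CD {cd_db:+.1f}dB, phono stage {phono_db:+.1f}dB")
```

A 2V CD player is about 14dB above a 400mV input, 20dB above a 200mV input and about 27dB above a 90mV input, which is why volume must be kept low with the last. A 100mV phono stage falls about 12dB short of a 400mV input.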

It’s possible to reduce input sensitivity using Rothwell in-line attenuators. There is no way to simply increase sensitivity though, if a source is too quiet.
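The effect of an in-line attenuator can be estimated as below. This is a sketch only: it ignores loading between source, attenuator and amplifier input, and the attenuation values are chosen for illustration rather than taken from any product specification.

```python
def effective_sensitivity_v(sensitivity_v, attenuation_db):
    """Input voltage needed for full output once an attenuator is fitted.

    An attenuator of N dB divides the signal by 10**(N/20), so the source
    must supply that much more voltage to reach the same output.
    """
    return sensitivity_v * 10 ** (attenuation_db / 20)

AMP_SENSITIVITY_V = 0.09  # a very sensitive 90mV input

for attenuation_db in (10, 15, 20):  # assumed attenuator values
    eff = effective_sensitivity_v(AMP_SENSITIVITY_V, attenuation_db)
    print(f"{attenuation_db}dB attenuator: effective sensitivity {eff * 1000:.0f}mV")
```

With 10dB of attenuation a 90mV input behaves like one of roughly 285mV, bringing it close to the values that suit silver disc players.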

Phono input sensitivity is much higher than line sensitivity, typically 3mV (at 1kHz) for Moving Magnet cartridges and ten times higher again (0.3mV) for Moving Coil cartridges. A volume control does not precede this input and it overloads at low levels, so no other sources should be plugged in here. Also, it is equalised by an RIAA network (bass boost / treble cut), so it cannot be used with very low output sources either.

Source: http://www.hi-fiworld.co.uk/amplifiers/75-amp-tests/150-sensitivity.html